A former very smart high schooler explains the basics of Linea’s inner-proof system and why lattice-based hashing is so cool.

Hey hey! I’m Emily, your friendly developer advocate, here to summarize the Linea prover for a very smart high schooler. That is, I’ll cover it at the highest level without getting into any of the actual math.

Check out our May community call, where our researcher explains his work on lattice-based hashing.

The Basics

I’m going to assume, as a very smart, crypto-native high schooler, you’ve already read our article, What is a zkEVM?, so you should be familiar with a lot of the basic concepts of the technology.

But I, as a former very smart high schooler, also know you’ll probably skim it, so I’ve defined relevant terms in Linea’s zk glossary. The glossary is open-source, so please add to it as you see fit! (But seriously, read the article. It’s good.)

Linea’s Prover Flow

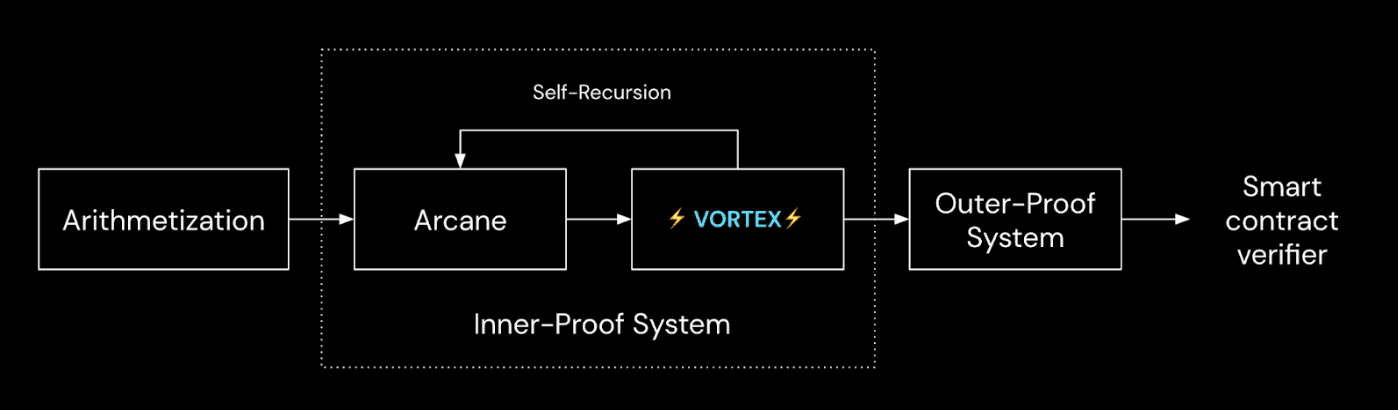

To prove a transaction happened, it has to go through a series of steps as outlined above.

The first step is called arithmetization, which, oversimplified, can be summarized as the process by which computer programs are turned into math for the zk-proof to understand.

More concretely, it is the process by which a transaction is turned into traces and a set of constraints that must be satisfied to prove that the computation is correct.
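To make “traces and constraints” a bit more tangible, here’s a tiny sketch. It is not Linea’s arithmetization: the computation (a Fibonacci sequence), the modulus, and the function names are all made up for illustration. The trace records every intermediate value, and the constraints are the rules that every honest trace must obey.

```python
# Toy arithmetization: a computation becomes a trace plus constraints.
P = 2**31 - 1  # small prime modulus, chosen only for illustration


def fibonacci_trace(steps):
    """Record every intermediate value of the computation as a trace."""
    trace = [1, 1]
    for _ in range(steps):
        trace.append((trace[-1] + trace[-2]) % P)
    return trace


def satisfies_constraints(trace):
    """Check the boundary constraints and the transition constraint."""
    # Boundary constraints: the computation must start from 1, 1.
    if len(trace) < 2 or trace[0] != 1 or trace[1] != 1:
        return False
    # Transition constraint: each value is the sum of the previous two.
    return all(
        (trace[i + 2] - trace[i + 1] - trace[i]) % P == 0
        for i in range(len(trace) - 2)
    )


honest = fibonacci_trace(8)
tampered = list(honest)
tampered[5] += 1  # change a single cell of the trace

print(satisfies_constraints(honest))    # True
print(satisfies_constraints(tampered))  # False
```

The prover’s job is to convince the verifier that a trace like `honest` exists, without the verifier having to re-check every row the way `satisfies_constraints` does.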

Following arithmetization, we go through an inner-proof system that recursively shrinks down the proof until we go through a final compression step in an outer-proof system.

Vortex and Arcane: Linea’s In-House Proof System

Linea’s technology is different from other zkEVMs in terms of its arithmetization scheme and inner-proof system. For the purpose of this post, we will focus on Linea’s inner-proof system: Vortex and Arcane.

To prove a transaction on Linea, we want to prove that some traces satisfy some constraints. But we have to turn the set of constraints into something more homogeneous (i.e. polynomial evaluations), so that it is easier for the prover to work with. We do this through Arcane, which compiles the arithmetization into an Interactive Oracle Proof (IOP) model.

An IOP is an interactive proof in which the verifier is not required to read the prover's entire message. Rather, there is an oracle (think of it as a third party that knows what the prover knows) that the verifier probabilistically queries to gain information. As a fun side note, we use the Wizard-IOP framework, which creates a level of abstraction that allows for more complex queries than what other standard IOP models offer.

After Arcane, instead of simply knowing whether or not traces satisfy some set of constraints, we can evaluate polynomials corresponding to the traces, which is a much better form for proofs from a mathematical point of view.

Since we do not want to rely on trusting a third party, we use cryptographic assumptions and small iterative transformations to replace the oracle with a polynomial commitment scheme.

A polynomial commitment is a fancy hashing of the traces that is sent as part of the proof. This information allows the verifier to know whether evaluations satisfy some property and are done correctly. For Linea, this relies on lattice-based cryptography and error-correcting codes.

So, why is lattice hashing cool?

- It is faster than the popular elliptic curve cryptography.
- It is plausibly post-quantum (aka resistant to quantum computing attacks; we say “plausibly” because no quantum attack against it is known today, not because one has been proven impossible).
- It is optimized for recursion.
- It is efficient for hardware acceleration and SIMD parallelism (single instruction, multiple data: running the same operation on many values at once).

Additionally, traditional hash functions force you to choose between being fast to run and being friendly to use in a SNARK. Lattice-based functions don’t have to make that tradeoff!

Now, what are error-correcting codes? They’re a tool commonly used in telecommunications, where signals are often disrupted. Basically, they provide data integrity and reliability by adding redundancy to the data, so that the receiver can identify and correct errors, even if some of the original data is corrupted or lost.
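Here’s about the simplest error-correcting code there is, a repetition code, just to show the idea of redundancy in action. This is not the code Linea uses, and the function names are made up for this example: every bit is sent three times, and the receiver takes a majority vote.

```python
def encode(bits, copies=3):
    """Add redundancy by repeating every bit `copies` times."""
    return [b for b in bits for _ in range(copies)]


def decode(received, copies=3):
    """Recover each bit by majority vote over its group of copies."""
    groups = [received[i:i + copies] for i in range(0, len(received), copies)]
    return [1 if sum(group) * 2 > len(group) else 0 for group in groups]


message = [1, 0, 1, 1]
sent = encode(message)

# Simulate a noisy channel: flip one bit in the first and last groups.
noisy = list(sent)
noisy[1] ^= 1
noisy[9] ^= 1

print(decode(noisy) == message)  # True
```

Each group of three survives a single flipped bit. Real systems use far more efficient codes, but the principle is the same: the encoded message is longer than the original, and that extra length is what makes errors detectable.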

So, how does a polynomial commitment work?

Essentially, we lay out all the traces in a big rectangle of rows and columns. We encode each row of traces and apply a lattice-based hash. We have now committed to these traces and cannot change our mind.

The verifier then sends a challenge. It asks for a random linear combination of the committed data and a random subset of the traces. And, because of probability math magic, if the linear combination, the claimed evaluations, and the selected subset of traces are all consistent with each other, the verifier can be confident (with overwhelming probability) that the computation is correct!
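The steps above can be sketched in a few lines of Python. Treat this as a toy under loud assumptions, not Linea’s implementation: SHA-256 stands in for the lattice-based hash, the encoding is a simple Reed-Solomon-style polynomial evaluation over a small prime field, the hash is applied to each column of the encoded rectangle (as in similar published schemes), and the check on claimed evaluations is left out. All names and parameters are hypothetical.

```python
import hashlib
import random

P = 2**31 - 1  # toy prime field modulus (assumption)
BLOWUP = 2     # each codeword is twice as long as its row (assumption)


def rs_encode(row):
    """Treat the row as polynomial coefficients and evaluate at many points."""
    n = BLOWUP * len(row)
    return [sum(c * pow(x, i, P) for i, c in enumerate(row)) % P for x in range(n)]


def hash_column(column):
    """Stand-in for the lattice-based hash: SHA-256 of the column's bytes."""
    data = b"".join(v.to_bytes(4, "big") for v in column)
    return hashlib.sha256(data).hexdigest()


def commit(rows):
    """Encode every row, then hash every column of the encoded rectangle."""
    encoded = [rs_encode(r) for r in rows]
    return encoded, [hash_column(col) for col in zip(*encoded)]


def combine(rows, coeffs):
    """Linear combination of the rows, computed entry by entry."""
    width = len(rows[0])
    return [sum(a * r[j] for a, r in zip(coeffs, rows)) % P for j in range(width)]


def verify(digests, coeffs, combined_row, opened):
    """Check opened columns against the commitment and the combined row."""
    codeword = rs_encode(combined_row)
    for j, column in opened.items():
        if hash_column(column) != digests[j]:
            return False  # column does not match what was committed
        if sum(a * v for a, v in zip(coeffs, column)) % P != codeword[j]:
            return False  # column disagrees with the combined row
    return True


rng = random.Random(0)
rows = [[3, 1, 4, 1], [5, 9, 2, 6], [5, 3, 5, 8]]  # the "rectangle" of traces
encoded, digests = commit(rows)                     # prover commits first

coeffs = [rng.randrange(P) for _ in rows]           # verifier's challenge
combined = combine(rows, coeffs)                    # prover's answer
picks = rng.sample(range(len(digests)), 3)          # verifier's random columns
opened = {j: [enc[j] for enc in encoded] for j in picks}

print(verify(digests, coeffs, combined, opened))    # True

cheat = list(combined)
cheat[0] = (cheat[0] + 1) % P                       # prover lies about the answer
print(verify(digests, coeffs, cheat, opened))       # False
```

The trick that makes this work is that the encoding is linear: encoding a combination of rows gives the same result as combining the encoded rows. So the verifier only needs a handful of columns to spot-check that the prover’s answer lines up with what was committed.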

Vortex proof size and verifier work are both O(sqrt(n)), where n is the size of the trace. That is smaller than the trace itself, but still too big to be verified on Ethereum directly.
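To get a feel for where the square root comes from, here’s a back-of-the-envelope sketch. It assumes a simplified cost model based on the description above: the verifier receives one combined row plus a fixed number of opened columns. The function name and the choice of 100 opened columns are made up for illustration and are not Linea’s actual parameters.

```python
import math


def verifier_data(n, rows, opened_columns):
    """Values the verifier receives: one combined row plus the opened columns."""
    cols = math.ceil(n / rows)
    return cols + opened_columns * rows


n = 1_000_000  # total number of cells in the trace rectangle

square = verifier_data(n, rows=1_000, opened_columns=100)  # 1,000 x 1,000
flat = verifier_data(n, rows=1, opened_columns=100)        # one giant row

print(square)  # 101000
print(flat)    # 1000100
```

With a roughly square layout, both the row length and the column length scale like sqrt(n), so the total does too. With a single giant row, the verifier ends up receiving about as much data as the whole trace.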

The final compression step: PlonK

For the final compression step, we produce one last proof, the PlonK proof, which can be verified on Ethereum directly. And this is very fast, thanks to the SNARK-friendly properties of lattice-based hashes as well as a SNARK’s ability to verify 1 transaction or 1 billion transactions in the same amount of time. Additionally, it generates a very small proof that we can efficiently verify on L1.

PlonK and Groth16 are zkSNARK constructions based on advanced cryptographic techniques, each widely leveraged by other zk-rollups. Linea’s outer-proof system previously used Groth16, but we changed it to PlonK. So, why the change?

Both Groth16 and PlonK require a trusted setup: a preprocessing phase which uses randomness to generate proving/verifying keys. What’s important to know is that the party running this preprocessing shouldn’t save these random values. Otherwise, it’d be able to create fake proofs.

So, in order to run this preprocessing phase in a “trusted” manner, we usually want multiple parties involved in the computation (MPC ceremony), such that if a single one of them is honest, we can guarantee that the random values are lost (and hence, nobody can create fake proofs).
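Here’s a toy illustration of why one honest participant is enough. It is a sketch only: real ceremonies work over elliptic-curve groups and include proofs that each contribution was done correctly, while this example uses plain modular exponentiation with a made-up modulus, starting value, and secrets.

```python
P = 2**127 - 1  # a Mersenne prime, used here as a toy modulus (assumption)
G = 3           # toy starting value that everyone agrees on (assumption)


def contribute(accumulator, secret):
    """Mix one participant's secret into the shared value.

    An honest participant deletes `secret` right after this call.
    """
    return pow(accumulator, secret, P)


# Three hypothetical participants, each with their own secret.
secrets_used = [123456789, 987654321, 555555555]

value = G
for s in secrets_used:
    value = contribute(value, s)

# The final value hides the product of all the secrets in its exponent.
combined_secret = 123456789 * 987654321 * 555555555
print(value == pow(G, combined_secret, P))  # True
```

To create fake proofs, someone would need `combined_secret`, and that means knowing every participant’s secret. If even one participant truly deletes theirs, the product can’t be rebuilt from the others (assuming it is hard to recover the exponent from the published value).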

When it comes to this preprocessing, PlonK’s setup only has to be run once and does not depend on the circuit (up to a maximum circuit size). Groth16, on the other hand, needs a new setup every time the circuit is changed.

Because Linea is still iterating on its circuit design, using Groth16 would mean regenerating the trusted setup and updating how we do verification every time the circuit changes. This would put us in a position where our community could say:

“Since you are rerunning the setup every two weeks on your own server, there is no guarantee that you’re not cheating and have the ability to create fake proofs.”

Do note this is not the same as saying PlonK is “more secure” than Groth16. Both are secure! Rather, PlonK makes it easier for us to deploy/update contracts and gives the community the confidence it needs that we can’t cheat the protocol.

Verification

Finally, at the end of our journey, we’ve created a proof to be verified by our verifier contract on Ethereum L1! The proof, state commitment, and calldata are sent to the verifier contract. If everything looks good, the new rollup state is finalized on the L1 smart contract!

Stay connected

So, there you have it! Expect to see a community call every month, where we’ll be highlighting the various researchers, community members, and key partners that make Linea possible.

And, of course, don’t forget to subscribe here, follow us on Twitter, YouTube, and Lens, and find us on Discord!